[Scientific News] Dr. Yanjun Wei's group published an article in the Journal of Speech, Language, and Hearing Research revealing the joint role of audiovisual cross-modal integration and high-variability speech in Mandarin tone perception.

In November 2022, Dr. Yanjun Wei's group published a paper in the Journal of Speech, Language, and Hearing Research titled "Visual-auditory integration and high-variability speech can facilitate Mandarin Chinese tone identification".

Previous studies have shown that pairing Mandarin auditory tones with visual information that depicts their pitch contours (e.g., tone marks or gestures) can facilitate tone perception (hereinafter the "visual effect"). The present study further examined audiovisual cross-modal integration together with high and low speech variability, to reveal their joint effect on tone perception and how tonal-language learning experience moderates the visual effect.

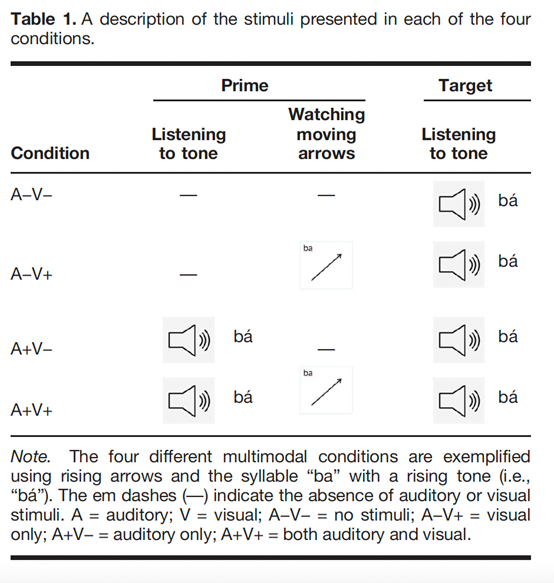

The present study consisted of three experiments, whose participants were speakers without tonal-language experience, elementary second-language learners of Mandarin, and native Chinese speakers, respectively. The experiments used a priming paradigm in which auditory stimuli carrying the Mandarin second and fourth tones served as targets, and participants were asked to judge quickly and accurately whether the pitch was rising or falling. The visual information consisted of moving arrows that symbolically depicted the pitch contours of the tones, with rising arrows corresponding to the second tone and falling arrows to the fourth tone. The prime was presented in four multimodal conditions (see Table 1): (1) visual stimuli only (A-V+); (2) auditory and visual stimuli (A+V+); (3) no stimuli (A-V-), as a control condition for A-V+; and (4) auditory stimuli only (A+V-), as a control condition for A+V+. The experiments also manipulated the variability of the auditory stimuli, which were divided into high-variability stimuli (three male and three female talkers, each producing the stimulus once) and low-variability stimuli (one male talker producing the same stimulus six times). The accuracy and response time of tone identification were recorded, and the d' value, which reflects the ability to discriminate the tones, was calculated.
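For readers unfamiliar with d', the sketch below shows one common way to compute it from response counts, as the difference between the z-transformed hit rate and false-alarm rate. The article does not state how the authors computed d', so the coding used here is an assumption: a "rising" response to a second-tone target counts as a hit, a "rising" response to a fourth-tone target counts as a false alarm, and a log-linear correction keeps the rates away from 0 and 1. The function name `d_prime` is hypothetical.

```python
from statistics import NormalDist


def d_prime(hits, misses, false_alarms, correct_rejections):
    """Sensitivity index d' = z(hit rate) - z(false-alarm rate).

    Assumed coding (not taken from the paper):
      hits               = "rising" responses to second-tone targets
      misses             = "falling" responses to second-tone targets
      false_alarms       = "rising" responses to fourth-tone targets
      correct_rejections = "falling" responses to fourth-tone targets
    """
    # Log-linear correction: add 0.5 to each count and 1 to each total,
    # so that a perfect or empty cell never yields a rate of exactly 0 or 1.
    hit_rate = (hits + 0.5) / (hits + misses + 1)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1)

    # Inverse of the standard normal CDF (the z-transform).
    z = NormalDist().inv_cdf
    return z(hit_rate) - z(fa_rate)
```

A listener who responds at chance gets a d' near zero, and a larger d' indicates better separation of the two tones regardless of any bias toward answering "rising" or "falling".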

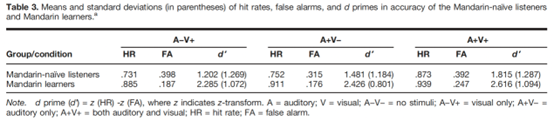

For speakers without any tonal-language learning experience, the visual effect on accuracy was larger in the cross-modal integration condition (A+V+ vs. A+V-) than in the unimodal condition (A-V+ vs. A-V-) (see Table 2). The higher d' for A+V+ than for A+V- also illustrates this (see Table 3). The visual information served as a conceptual metaphor for the auditory tones, allowing the auditory and visual inputs to map onto a common representation and thus facilitating tone perception. However, the response-time results did not show this pattern. This may be due to the cognitive load incurred when audiovisual information is integrated, since cross-modal integration takes some processing time to complete.

The visual effects on accuracy and reaction time in the unimodal condition were seen only in high-variability speech, not in low-variability speech. There are two main reasons for this. First, visual symbols are more stable and easier to process than fleeting auditory stimuli, which leads listeners to pay more attention to subtle changes in pitch contour. Second, visual symbols help listeners give greater weight to inter-category differences in the acoustic features of different tone categories (e.g., pitch contour) during tone recognition, while ignoring intra-category acoustic differences. The presence of two visual symbols enables listeners to sort thousands of different speech signals into two limited categories, effectively forming a many-to-one mapping between tone and form that stays within human cognitive limits.

Combining the results of the three experiments revealed that the less tonal-language learning experience listeners had, the stronger the visual effect. This is related to the degree of dissociation between the auditory and visual representations of Mandarin tones. Native speakers shared a common representation of audiovisual information and processed auditory information without the aid of visual information. Speakers without tonal-language experience had not yet established an auditory representation of Mandarin tones and needed visual information to assist them. Second-language learners were in an intermediate, transitional state: they handled simple tasks (low-variability stimuli and accuracy results) without visual information, whereas complex tasks (high-variability stimuli and reaction-time results) required integration of the audiovisual modalities to facilitate the perception of auditory information.

In summary, our study reveals the advantages and disadvantages of audiovisual integration in tone perception, as well as the joint effects of cross-modal integration and high-variability speech on tone perception, shedding light on theories of phonological symbolization and categorization in tone perception.

Dr. Yanjun Wei is the first and corresponding author of the paper, and Dr. Lin Jia from the Beijing Chinese Academy of Sciences, Dr. Fei Gao from the University of Macau, and Prof. Jianqin Wang from the Center for the Cognitive Science of Language at Beijing Language and Culture University are co-authors of the paper. This study is the result of a youth project of the Beijing Social Science Foundation. This study also received academic support from Professors Seth Wiener, Eric Thiessen, and Brian MacWhinney at Carnegie Mellon University and Professor Pedro Paz-Alonso at the Basque Center.

Paper information:

Wei, Y.*, Lin, J., Gao, F., & Wang, J. (2022). Visual-auditory integration and high-variability speech can facilitate Mandarin Chinese tone identification. Journal of Speech, Language, and Hearing Research, 65(11), 4096-4111.